Picture this: you’re having a late-night conversation with ChatGPT about your business strategy, personal finances, or maybe even relationship troubles. You assume it’s private, just between you and the AI. Then you discover that not only is every word being stored indefinitely, but a court order now prevents even your “deleted” conversations from actually disappearing.

Welcome to the complicated world of data privacy in 2025, where ChatGPT has exploded to over 180 million users and 600 million monthly visits, but the rules about your information have fundamentally changed. What started as concerns about AI training on user data has evolved into a full-blown legal battle that’s upended everything you thought you knew about digital privacy.

If you’ve been using ChatGPT without thinking twice about what happens to your conversations, you’re not alone. Most users have no idea that recent court orders, policy changes, and corporate data practices have created a perfect storm where your most private AI interactions might be far less private than you ever imagined. The stakes couldn’t be higher, especially as data privacy becomes the currency of the digital age.

What ChatGPT Actually Collects From You

ChatGPT’s data collection practices are extensive and go far beyond just your conversations. Every query, instruction, or conversation with ChatGPT is stored indefinitely unless you manually delete it. This includes everything from casual questions about recipes to sensitive business strategies you might inadvertently share.

When you interact with ChatGPT, the platform collects your prompts and responses, along with technical information like your IP address, browser type, timestamps, and device details. They also track how you use the platform, when you’re active, and your interaction patterns. The most significant change in 2025 came from a policy update that removed the ability for free and Plus users to disable chat history entirely, representing a major shift away from user data privacy control.

The 2025 Legal Bombshell That Changed Everything

Data privacy took a dramatic turn in 2025 in The New York Times’ copyright lawsuit against OpenAI, filed in late 2023, when the newspaper demanded that the company retain all consumer data indefinitely. A court order now forces OpenAI to keep deleted ChatGPT chats that would typically be removed from its systems within 30 days. While OpenAI is actively appealing this decision, it’s currently the reality for millions of users concerned about data privacy.

This legal development has created a confusing patchwork of retention policies. Free and Plus users are subject to the court order, meaning their data gets retained indefinitely. Enterprise and Team users maintain better control, with conversations removed within 30 days unless legally required otherwise. This creates a clear divide in data privacy protections based on what you’re paying.

What Privacy Controls You Actually Have

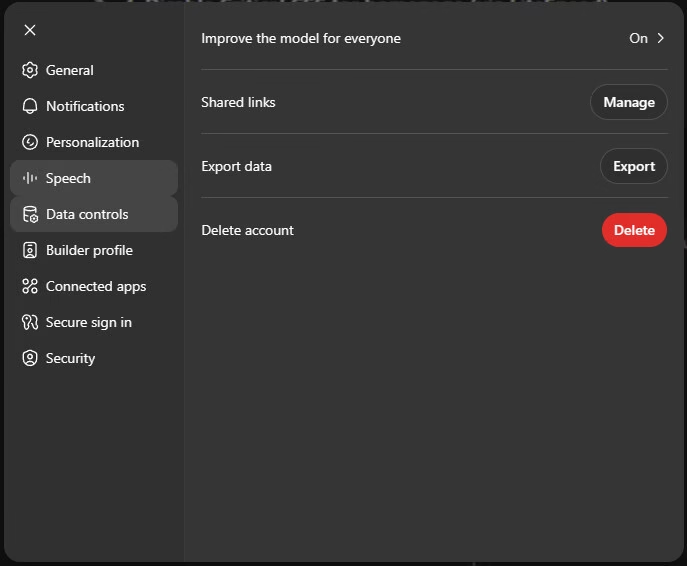

Despite these legal challenges, OpenAI does maintain some data privacy controls, though they’re not as robust as many users believe. To access these controls, click your profile avatar in the top right corner and select “Settings,” then choose “Data controls” from the left sidebar.

Here’s what you can actually control. Under “Improve the model for everyone,” you can turn this setting off to prevent your conversations from being used to train AI models. You’ll also find options to “Export data” to see everything ChatGPT has collected about you, and “Delete account” for complete removal (which permanently bans your email and phone number).

The screenshot above shows these Data controls, including the options to opt out of AI training and to export your data.

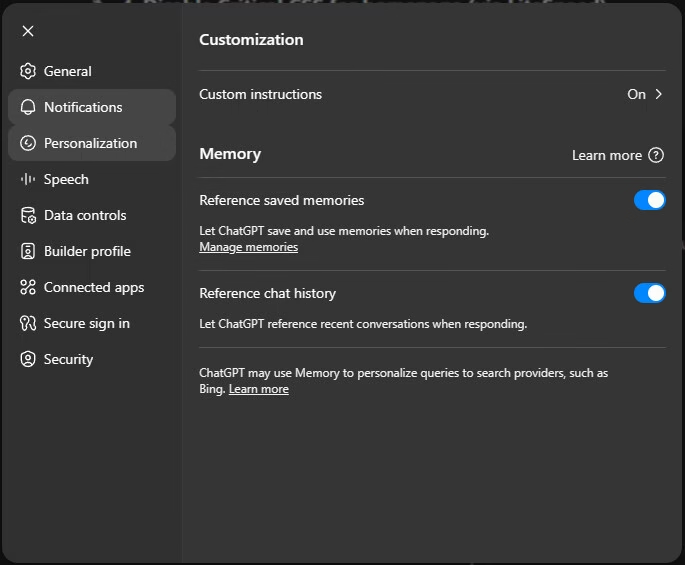

In the Personalization section, you can manage “Memory” settings, which control whether ChatGPT saves and uses memories when responding, and “Reference chat history,” which lets ChatGPT reference recent conversations. However, there’s a critical reality check: due to the ongoing New York Times lawsuit, OpenAI is currently required by court order to retain all consumer data indefinitely, including chats you delete or mark as temporary. While OpenAI is actively appealing this order and calling it “an overreach,” your deleted conversations are not actually being deleted right now, regardless of your privacy settings.

Why ChatGPT Fails European Privacy Standards

One of the most concerning aspects of data privacy in 2025 is ChatGPT’s ongoing failure to comply with GDPR and similar frameworks. As of February 2025, ChatGPT remains non-compliant due to indefinite retention of user prompts, which directly conflicts with GDPR’s data minimization requirements.

The statistics paint a sobering picture: a 2024 EU audit found that 63% of ChatGPT user data contained personally identifiable information, while only 22% of users were aware of opt-out settings. Together, these figures suggest that many users are sharing personal details that could be traced back to them, often without knowing they can opt out.

The Privacy Divide Between Users and Businesses

Here’s an uncomfortable truth about data privacy: if you’re paying for business plans, you get significantly better protection than regular users. Companies using ChatGPT Enterprise or Team plans enjoy privileges that individual users simply don’t have access to. Their conversations aren’t used to train AI models, they can set their own data deletion schedules, and they receive legal protections that individual users don’t get.

As a regular user, you’re essentially subsidizing ChatGPT’s development with your data. Unless you opt out, your conversations help train the AI that businesses then pay to use with stronger data privacy protections. It’s a two-tiered system where money buys privacy, and free users get less control over their information.

Real World Risks You Should Know About

The practical implications of data privacy extend into real-world scenarios that affect everyday users. In 2023, a quarter million OpenAI logins were found for sale on the dark web, highlighting how vulnerable user accounts can be. The more sensitive information you’ve shared in your chat logs, the more dangerous it becomes if your account information falls into the wrong hands.

Major corporations have learned painful lessons through high-profile incidents. Samsung experienced an accidental source code leak when employees shared confidential information with ChatGPT, while Amazon had to warn employees against sharing internal data after discovering ChatGPT responses that resembled their proprietary information. Adding another layer of concern, OpenAI’s privacy policy states that it may share your data with government authorities, for example when required by law.

Protecting Yourself

Given the current challenges, the most effective data privacy protection strategy is simply not sharing sensitive information with the platform. OpenAI stores your conversations for some period regardless of your settings, making it possible for information to be accessed by bad actors in security breaches.

Never share personal identifiers like Social Security numbers, full names, or addresses. Avoid uploading documents containing sensitive business information, and use pseudonyms when discussing people or companies. Most importantly, consider any information you share with ChatGPT as potentially public, regardless of your privacy settings.
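To put the advice about pseudonyms and identifiers into practice, you could scrub text before pasting it into ChatGPT. The Python sketch below is a hypothetical helper (the `scrub` function and its patterns are illustrative assumptions, not an OpenAI feature): it swaps names you list for pseudonyms and masks a few U.S.-style formats for Social Security numbers, email addresses, and phone numbers. Simple regular expressions miss many identifiers, so treat it as a first pass rather than a guarantee.

```python
import re
from typing import Dict, Optional

# Hypothetical patterns for a few U.S.-style identifiers. These are
# illustrative only and will miss many real-world formats.
PATTERNS = {
    "[SSN]": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "[EMAIL]": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "[PHONE]": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def scrub(text: str, aliases: Optional[Dict[str, str]] = None) -> str:
    """Swap listed names for pseudonyms, then mask pattern-based identifiers."""
    # Exact, case-sensitive replacement of each real name with its alias.
    for real_name, alias in (aliases or {}).items():
        text = text.replace(real_name, alias)
    # Replace every match of each pattern with its placeholder label.
    for label, pattern in PATTERNS.items():
        text = pattern.sub(label, text)
    return text


if __name__ == "__main__":
    prompt = (
        "Draft a reply to Jane Doe (jane.doe@example.com, 555-123-4567) "
        "about her SSN 123-45-6789."
    )
    print(scrub(prompt, {"Jane Doe": "Person A"}))
```

Names are matched exactly as typed (case-sensitive), so list every variant of a name you expect to appear. Anything the patterns don't cover, such as street addresses, still needs a manual check before you hit send.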

The decision whether to create an account involves weighing convenience against data collection. Without an account, you don’t provide an email address, but you lose access to privacy controls. If you do create an account, click your profile avatar in the top right corner, select Settings > Data controls, and turn off “Improve the model for everyone” to prevent your data from training future AI models. You can also manage your Memory settings under Personalization to control how much ChatGPT remembers about your conversations.

Alternatives for Privacy Conscious Users

If ChatGPT’s practices are deal-breakers for your data privacy needs, several alternatives prioritize user protection. Privacy-focused AI platforms often feature local processing, strict no-retention policies, or enhanced encryption, though they typically lack some of ChatGPT’s advanced capabilities.

For businesses specifically, Microsoft’s Azure OpenAI Service offers enhanced controls where OpenAI has no access to inputs and outputs, though Microsoft still monitors data to prevent abuse. This represents a middle ground between ChatGPT’s data collection and complete local processing.

Making the Right Choice for You

Data privacy in 2025 presents a complex landscape where convenience often comes at the cost of protection. The recent court order requiring indefinite data retention for consumer users, combined with ongoing GDPR non-compliance, means users must be more cautious than ever about what they share.

For casual users seeking AI assistance with non-sensitive tasks, ChatGPT remains a powerful tool with reasonable controls if properly configured. However, for businesses handling confidential information or individuals with strict requirements, enterprise solutions or privacy-focused alternatives may be more appropriate.

The key is understanding exactly what data gets collected, how it’s used, and what controls you actually have. By taking proactive steps to limit sensitive data sharing and utilizing available privacy controls, you can enjoy AI assistance benefits while minimizing risks.

Remember that in the world of AI, your data privacy is ultimately your responsibility. Stay informed, review your settings regularly, and don’t hesitate to explore alternatives if ChatGPT’s current practices don’t meet your needs. The AI revolution will continue, but it doesn’t have to come at the expense of your privacy.
